Teaching an Old Dog New Tricks

Reinforcement Learning, Proximal Policy Optimization, Legged Locomotion, Simulation

Overview


Faster!

This project aims to train the Unitree Go2 robot dog to perform a variety of tasks, including walking, running, jumping, strafing, and crawling over rough terrain. To train the policy, we use an Actor-Critic network structure within a Proximal Policy Optimization (PPO) algorithm. To simulate the robot during training, we use Genesis, a new, lightweight physics simulator that is well suited to training locomotion policies for legged robots. Sim-to-real transfer is still in progress for this project, but we hope to have it working soon!

Hardware

Unitree Go2 Dog

The Unitree Go2 robot dog is a quadruped (of course) with three actuated joints per leg: hip, thigh, and calf. With four legs, this gives a 12-dimensional action space (one command per joint), which is the output of the actor network. The Go2's URDF is open source, and we use it to model and visualize the robot in the environment. As a baseline, we start from the Genesis example of the Go2 walking on a flat plane.
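To make the action-space arithmetic concrete, here is a minimal sketch of how a 12-dimensional action vector (4 legs × 3 joints) could be mapped to joint position targets. The joint names are assumed to follow the Go2 URDF pattern (`FR_hip_joint`, `FR_thigh_joint`, `FR_calf_joint`, ...), and the default standing angles and `ACTION_SCALE = 0.25` are illustrative assumptions, not values taken from this project.

```python
# Hypothetical mapping from a 12-D policy action to Go2 joint position targets.
LEGS = ["FR", "FL", "RR", "RL"]      # front-right, front-left, rear-right, rear-left
JOINTS = ["hip", "thigh", "calf"]    # three actuated joints per leg
JOINT_NAMES = [f"{leg}_{joint}_joint" for leg in LEGS for joint in JOINTS]

ACTION_SCALE = 0.25  # assumed scale from network output to radians


def default_angle(leg, joint):
    """Assumed nominal standing pose in radians (illustrative values only)."""
    if joint == "hip":
        return 0.0
    if joint == "thigh":
        return 0.8 if leg.startswith("F") else 1.0
    return -1.5  # calf


DEFAULT_POS = {f"{leg}_{joint}_joint": default_angle(leg, joint)
               for leg in LEGS for joint in JOINTS}


def action_to_targets(action):
    """Interpret each action entry as a scaled offset from the default pose."""
    if len(action) != len(JOINT_NAMES):
        raise ValueError(f"expected {len(JOINT_NAMES)} actions, got {len(action)}")
    return {name: DEFAULT_POS[name] + ACTION_SCALE * a
            for name, a in zip(JOINT_NAMES, action)}


print(len(JOINT_NAMES))  # 12 = 4 legs x 3 joints
```

Treating actions as offsets around a standing pose keeps a zero action physically sensible, which is a common design choice for position-controlled legged robots.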

Proximal Policy Optimization

PPO starts by initializing the actor and critic networks. Both take the observation as input: the actor outputs a probability distribution over actions, and the critic outputs a single estimate of the expected return (the value of the current state). At each step, an action is sampled from the actor's distribution, the critic predicts the value, and the resulting experience is stored. These steps are repeated as a "data collection" phase. Once enough data has been collected, we can begin to learn a policy. For each stored experience, we re-evaluate the action under the current policy and compute a policy ratio: the probability of that action under the current policy divided by its probability under the policy that collected the data. This ratio is multiplied by an advantage estimate, which measures how much better the action turned out than the critic expected, to form the surrogate loss. Finally, the "proximal" part of the algorithm clips the ratio to the range [1-ε, 1+ε] so that each update cannot move the policy too far from the previous one. The equations for each piece are given below.
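The policy-ratio step can be sketched for a continuous action space like the Go2's. This sketch assumes a diagonal Gaussian actor (one mean per action dimension plus a log standard deviation), a common choice for locomotion PPO but an assumption here. The ratio is computed as exp(log π_new − log π_old), which is more numerically stable than dividing raw densities.

```python
import math
import random


def gaussian_log_prob(action, mean, log_std):
    """Log-density of an action under a diagonal Gaussian policy."""
    total = 0.0
    for a, m, ls in zip(action, mean, log_std):
        std = math.exp(ls)
        total += -0.5 * ((a - m) / std) ** 2 - ls - 0.5 * math.log(2 * math.pi)
    return total


def sample_action(mean, log_std, rng=random):
    """Draw one action from the Gaussian output by the actor."""
    return [rng.gauss(m, math.exp(ls)) for m, ls in zip(mean, log_std)]


def probability_ratio(action, mean_new, log_std_new, log_prob_old):
    """r_t(theta) = pi_new(a|s) / pi_old(a|s), computed in log space."""
    return math.exp(gaussian_log_prob(action, mean_new, log_std_new) - log_prob_old)


# Data collection: sample an action under the old policy and store its log-prob.
rng = random.Random(0)
mean_old, log_std = [0.0] * 12, [math.log(0.5)] * 12
a = sample_action(mean_old, log_std, rng)
logp_old = gaussian_log_prob(a, mean_old, log_std)

# Learning phase: an unchanged policy gives a ratio of exactly 1.
print(probability_ratio(a, mean_old, log_std, logp_old))  # 1.0
```

Storing `logp_old` at collection time is what lets the ratio be computed later without keeping a copy of the old network.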

Objective Function

\[ L^{CLIP}(\theta) = \mathbb{E}_t \left[ \min \left( r_t(\theta) \hat{A}_t, \text{clip}(r_t(\theta), 1 - \epsilon, 1 + \epsilon) \hat{A}_t \right) \right] \] where:

- \( r_t(\theta) = \frac{\pi_\theta(a_t|s_t)}{\pi_{\theta_{\text{old}}}(a_t|s_t)} \) is the probability ratio,

- \( \hat{A}_t \) is the advantage estimate at time \( t \),

- \( \epsilon \) is a hyperparameter (e.g., 0.2) that controls the clipping range.
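A sample estimate of \( L^{CLIP} \) averages the clipped term over a batch. Here is a minimal sketch in plain Python, assuming the ratios and advantages have already been computed; `eps = 0.2` matches the example value above.

```python
def clipped_surrogate(ratios, advantages, eps=0.2):
    """Sample estimate of L^CLIP: mean of min(r*A, clip(r, 1-eps, 1+eps)*A)."""
    terms = []
    for r, adv in zip(ratios, advantages):
        clipped_r = min(max(r, 1.0 - eps), 1.0 + eps)
        terms.append(min(r * adv, clipped_r * adv))
    return sum(terms) / len(terms)


# Sample 1: positive advantage, ratio 1.5 -> gain is capped at (1 + eps) * A = 1.2.
# Sample 2: negative advantage, ratio 0.5 -> min keeps the more pessimistic -0.8.
print(clipped_surrogate([1.5, 0.5], [1.0, -1.0]))
```

The printed value is approximately 0.2, the mean of 1.2 and -0.8. Taking the minimum means clipping only ever removes incentive to move the ratio further, never adds it.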

Advantage Estimation

\[ \hat{A}_t = \sum_{l=0}^{\infty} (\gamma \lambda)^l \delta_{t+l} \] where:

- \( \gamma \) is the discount factor,

- \( \lambda \) is the GAE parameter,

- \( \delta_t = r_t + \gamma V(s_{t+1}) - V(s_t) \) is the TD residual.
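In practice, the infinite sum is truncated at the rollout length and bootstrapped with the critic's value of the state after the last step. The sketch below computes it with the usual backward recursion \( \hat{A}_t = \delta_t + \gamma\lambda \hat{A}_{t+1} \). The `dones` flags, which stop bootstrapping across episode boundaries, and the defaults γ = 0.99 and λ = 0.95 are assumptions, not this project's settings. It also returns \( \hat{A}_t + V(s_t) \), one common choice of value target.

```python
def compute_gae(rewards, values, dones, last_value, gamma=0.99, lam=0.95):
    """Truncated GAE over one rollout; dones[t] = 1 means the episode ended after step t."""
    advantages = [0.0] * len(rewards)
    gae = 0.0
    next_value = last_value
    for t in reversed(range(len(rewards))):
        not_done = 1.0 - dones[t]
        # TD residual: delta_t = r_t + gamma * V(s_{t+1}) - V(s_t)
        delta = rewards[t] + gamma * next_value * not_done - values[t]
        # Backward recursion: A_t = delta_t + gamma * lambda * A_{t+1}
        gae = delta + gamma * lam * not_done * gae
        advantages[t] = gae
        next_value = values[t]
    returns = [adv + v for adv, v in zip(advantages, values)]  # value targets
    return advantages, returns
```

With γ = λ = 1, zero values, and a reward of 1 per step, the advantages reduce to the remaining reward-to-go.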

Value Function Loss (MSE)

\[ L^{VF}(\theta) = \mathbb{E}_t \left[ \left( V_\theta(s_t) - V_t^{\text{target}} \right)^2 \right] \] where \( V_t^{\text{target}} \) is the target value (e.g., discounted return).

Entropy Bonus

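\[ L^{ENT}(\theta) = \mathbb{E}_t \left[ \mathcal{H}\left( \pi_\theta(\cdot|s_t) \right) \right] = \mathbb{E}_t \left[ -\sum_{a} \pi_\theta(a|s_t) \log \pi_\theta(a|s_t) \right] \] where \( \mathcal{H} \) is the entropy of the policy at state \( s_t \) (for continuous actions, the sum becomes an integral).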
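The entropy of a discrete policy is \( -\sum_a \pi(a|s) \log \pi(a|s) \). For a continuous action space like the Go2's joint commands, a diagonal Gaussian policy (assumed here, as is common for locomotion) has a closed-form entropy, \( \sum_i \left( \log \sigma_i + \tfrac{1}{2}\log(2\pi e) \right) \), so no sampling is needed. A minimal sketch of both:

```python
import math


def diag_gaussian_entropy(log_std):
    """Closed-form entropy of a diagonal Gaussian: sum_i (log sigma_i + 0.5*log(2*pi*e))."""
    return sum(ls + 0.5 * math.log(2 * math.pi * math.e) for ls in log_std)


def categorical_entropy(probs):
    """Discrete counterpart: -sum_a pi(a|s) log pi(a|s), skipping zero-probability actions."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)
```

The Gaussian entropy depends only on the standard deviations, so the bonus rewards keeping exploration noise wide rather than pushing the action means anywhere in particular.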

Total Loss

\[ L^{TOTAL}(\theta) = L^{CLIP}(\theta) - c_1 L^{VF}(\theta) + c_2 L^{ENT}(\theta) \]
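\( L^{TOTAL} \) is an objective to be maximized, so gradient-based optimizers usually minimize its negative. The sketch below combines the three terms over a batch in plain Python. The coefficients `c1 = 1.0` and `c2 = 0.01` are assumed defaults, not this project's tuned values, and in a real implementation these quantities would be tensors so the loss can be backpropagated.

```python
def ppo_loss(ratios, advantages, values, value_targets, entropies,
             eps=0.2, c1=1.0, c2=0.01):
    """Scalar loss to minimize: the negative of L^TOTAL = L^CLIP - c1*L^VF + c2*L^ENT."""
    n = len(ratios)
    # Clipped surrogate, averaged over the batch.
    l_clip = sum(min(r * adv, min(max(r, 1.0 - eps), 1.0 + eps) * adv)
                 for r, adv in zip(ratios, advantages)) / n
    # Mean squared error between predicted values and value targets.
    l_vf = sum((v - vt) ** 2 for v, vt in zip(values, value_targets)) / n
    # Mean policy entropy (exploration bonus).
    l_ent = sum(entropies) / n
    objective = l_clip - c1 * l_vf + c2 * l_ent  # L^TOTAL, to be maximized
    return -objective
```

The value term enters with a minus sign because a larger prediction error should lower the objective, while the entropy term enters with a plus sign to reward exploration.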

Reward Function Engineering

To create an effective policy for each task, we need custom reward functions that encourage the desired behavior. For example, a common running gait for a quadruped robot is the diagonal gait (trot), where the front-right and rear-left legs move together, and the front-left and rear-right legs move together. To achieve this, we added a custom reward term that penalizes correlation between the front-left (FL) and front-right (FR) legs. Below is a partial list of the custom reward functions added for different tasks.

- Forward Velocity
- Aligned Hips
- Base Height
- Action Rate
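The exact reward formulas are not given here, so the following is only a hypothetical sketch of how three of the listed terms and the FL/FR correlation penalty described above are often written. The tracking width `sigma`, the 0.3 m target height, the product-of-velocities form of the correlation penalty, and all weights in `WEIGHTS` are illustrative assumptions.

```python
import math


def forward_velocity_reward(vx, vx_cmd, sigma=0.25):
    """Tracking reward in (0, 1]: equals 1 when base velocity matches the command."""
    return math.exp(-((vx - vx_cmd) ** 2) / sigma)


def base_height_penalty(height, target=0.3):
    """Squared deviation of base height from an assumed target height in meters."""
    return (height - target) ** 2


def action_rate_penalty(action, prev_action):
    """Penalizes jerky control: squared change in actions between steps."""
    return sum((a - p) ** 2 for a, p in zip(action, prev_action))


def front_leg_correlation_penalty(dq_fl, dq_fr):
    """Hypothetical form: positive when FL and FR joints move in the same direction."""
    return max(0.0, dq_fl * dq_fr)


# Hypothetical weights; negative weights turn the penalties into negative rewards.
WEIGHTS = {"vel": 1.0, "height": -50.0, "rate": -0.005, "front_corr": -0.1}


def total_reward(vx, vx_cmd, height, action, prev_action, dq_fl, dq_fr):
    """Weighted sum of the individual reward terms for one time step."""
    return (WEIGHTS["vel"] * forward_velocity_reward(vx, vx_cmd)
            + WEIGHTS["height"] * base_height_penalty(height)
            + WEIGHTS["rate"] * action_rate_penalty(action, prev_action)
            + WEIGHTS["front_corr"] * front_leg_correlation_penalty(dq_fl, dq_fr))
```

Keeping each term as its own function makes it easy to enable, disable, or reweight terms per task, which fits the task-specific reward engineering described above.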

Simulation

Genesis Simulator

To simulate the Go2 interacting with its environment, we used Genesis, a lightweight physics simulator well suited to training locomotion policies for legged robots. As mentioned earlier, we use the open-source Unitree Go2 URDF to model the robot, and a flat plane on which it performs the various tasks (although some tasks use different terrain). As the robot interacts with this environment, we record its states, rewards, and other experiences.

The algorithm used is PPO (Proximal Policy Optimization). In a nutshell, this algorithm feeds the observation into a neural network (the actor), which outputs a probability distribution over actions, trained to maximize the reward. A second network (the critic) takes the same input and outputs the expected reward. This Actor-Critic relationship trains the quadruped to walk!
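The two-network structure can be sketched as a forward pass in plain Python. This is a hypothetical illustration: the observation size of 45, the two hidden layers of 64 units, the tanh activations, and the small random initialization are all assumptions. The actor outputs one mean per joint (a separate, learned standard deviation would complete the Gaussian), and the critic outputs a single value.

```python
import math
import random


def init_mlp(sizes, rng):
    """Random weights for a fully connected net; sizes = [in, hidden..., out]."""
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        w = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        b = [0.0] * n_out
        layers.append((w, b))
    return layers


def mlp_forward(layers, x):
    """tanh on hidden layers, linear output layer."""
    for i, (w, b) in enumerate(layers):
        x = [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(w, b)]
        if i < len(layers) - 1:
            x = [math.tanh(v) for v in x]
    return x


OBS_DIM, ACT_DIM, HIDDEN = 45, 12, 64  # hypothetical sizes
rng = random.Random(0)
actor = init_mlp([OBS_DIM, HIDDEN, HIDDEN, ACT_DIM], rng)   # observation -> action means
critic = init_mlp([OBS_DIM, HIDDEN, HIDDEN, 1], rng)        # observation -> scalar value

obs = [0.0] * OBS_DIM
action_mean = mlp_forward(actor, obs)
value = mlp_forward(critic, obs)[0]
print(len(action_mean), value)  # 12 0.0
```

Both networks read the same observation but are trained toward different targets: the actor through the clipped surrogate, the critic through the value loss.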

Results

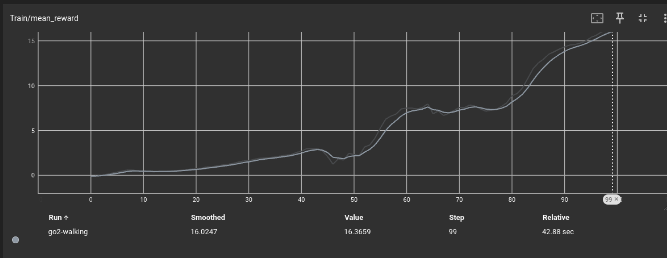

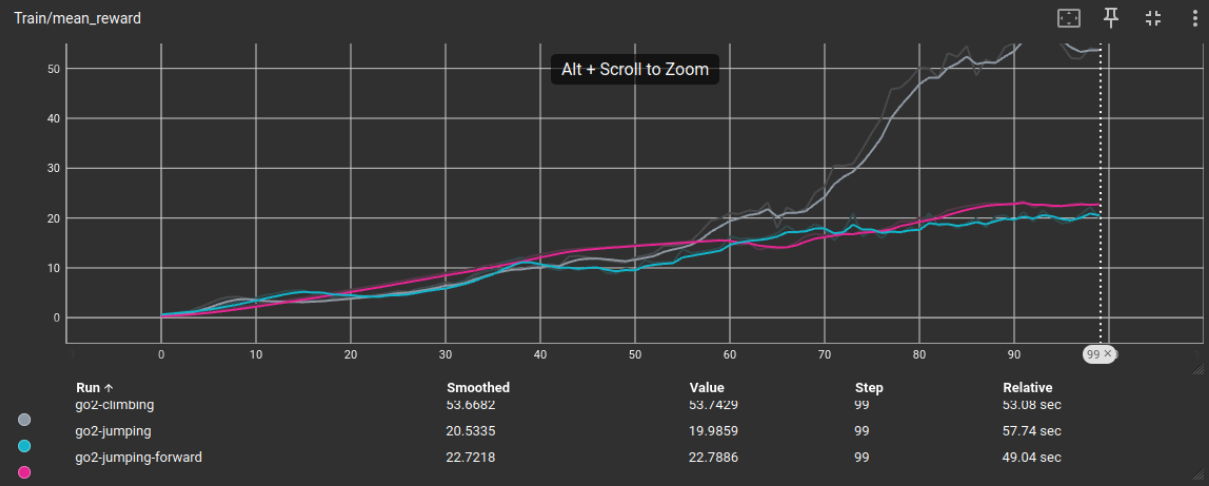

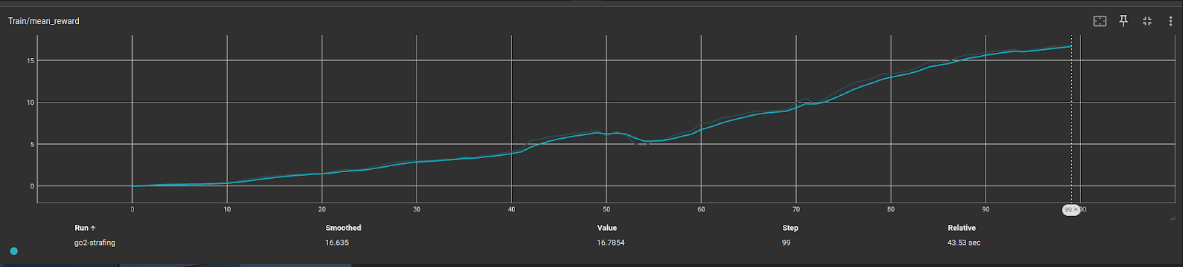

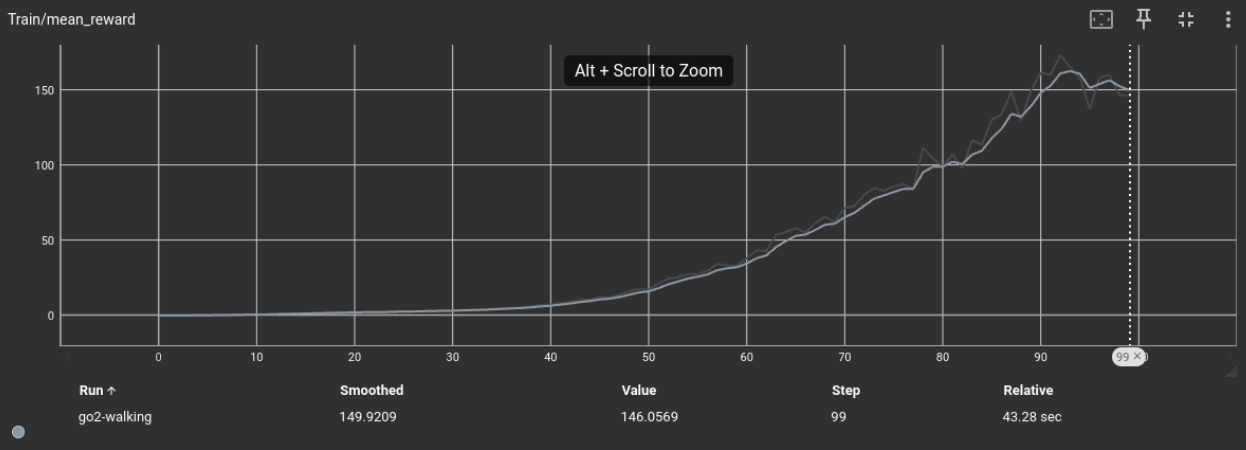

Our results focus on the mean reward per episode, which we found to be the most informative measure of each policy's success. Because each task uses its own reward function, these values are not directly comparable across tasks.

Baseline Results

Walking Simulation

Walking: ~16

Our Results

Climbing Simulation

Jumping Simulation

Jumping Forward Simulation

Climbing: ~53, Jumping: ~20, Jumping Forward: ~22

Strafing Simulation

Strafing: ~16


Running Simulation

Running: ~146